Japanese babies are upending the world of linguistics, one mora at a time

What works for English is not universal when it comes to language learning

April 3, 2017

Amanda Alvarez

This post originally appeared on Neurographic

Image: Helen Hyde via Wikimedia Commons, public domain

Nissan, Toyota, Honda — three universally recognized car manufacturers, two of which are also common Japanese surnames. If you ask an English speaker to tell you which name is longest, they’ll say Toyota, with its three syllables. A Japanese speaker, on the other hand, will say Nissan. This difference reveals a lot about the underlying rhythms used in human vocal communication, says developmental neurolinguist Reiko Mazuka of the RIKEN Brain Science Institute, and also casts doubt on universal theories of language-learning that are mostly based on studies of English speakers.

So why is Nissan longer to Japanese ears? It’s all based on the ‘mora’, a unit of linguistic rhythm that is distinct from stress (as in German or English) or the syllable (as in French). Most people are familiar with this way of counting from haiku, whose five–seven–five pattern defines the classical Japanese meter. The ‘syllables’ in haiku aren’t really syllables but rather morae. Nissan thus has four morae of equal duration (ニッサン or ni-s-sa-n), rather than just the two syllables picked up by Western ears.
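To make the counting concrete, here is a minimal sketch in Python that counts morae in a word written in katakana. It assumes a simplified rule that is not spelled out in the article: every kana counts as one mora (including ッ, ン and the long-vowel mark ー), except the small kana such as ャ, ュ and ョ, which merge with the kana before them. The function name `count_morae` is made up for this illustration.

```python
import unicodedata

# Small kana that merge with the preceding kana into one mora (e.g. キャ).
# Assumption of this sketch: everything else, including ッ, ン and ー, is one mora.
SMALL_KANA = set("ャュョァィゥェォヮ")


def count_morae(katakana: str) -> int:
    """Count morae in a katakana word using the simplified rule above."""
    # NFC keeps voiced kana such as ダ as a single code point.
    word = unicodedata.normalize("NFC", katakana)
    return sum(1 for ch in word if ch not in SMALL_KANA)


for name in ["ニッサン", "トヨタ", "ホンダ"]:
    print(name, count_morae(name))
```

Under this rule ニッサン comes out at four morae while トヨタ and ホンダ each come out at three, which matches the intuition of a Japanese speaker that Nissan is the longest of the three names.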

But Japanese babies are not born with an internal mora counter, and this is what got Mazuka so interested in returning to Japan from the United States, where she completed her PhD and still maintains a research professorship at Duke University. “The Japanese language doesn’t quite fit with the dominant theories,” she says, “and I used to think this was because Japanese was exceptional. Now my feeling is, Japanese is not an exception. Rather, what works for English is not universal” in terms of mechanisms for language learning.

Mazuka’s lab on the outskirts of Tokyo studies upwards of 1,000 babies a year, and she explains that infants start to recognize mora as a phonemic unit at about 10 months of age. “Infants have to learn how duration and pitch are used as cues in language,” she says. And Japanese is one of the few languages in the world that contains duration-based phonemic contrasts — what English speakers think of as short and long vowels, for example — that can distinguish one mora from two morae. Dominant ideas in the field suggest all babies, from birth, use rhythm as a ‘bootstrap’ to start segmenting sounds into speech, first identifying whether they are dealing with a syllable- or stress-based language. “The theory says that rhythm comes first, but Japanese babies can’t count morae until they’re almost a year old,” Mazuka exclaims. “You can’t conclude that rhythm is the driving force of early phonological development, when evidence from Japanese babies shows it’s not an a priori unit.”

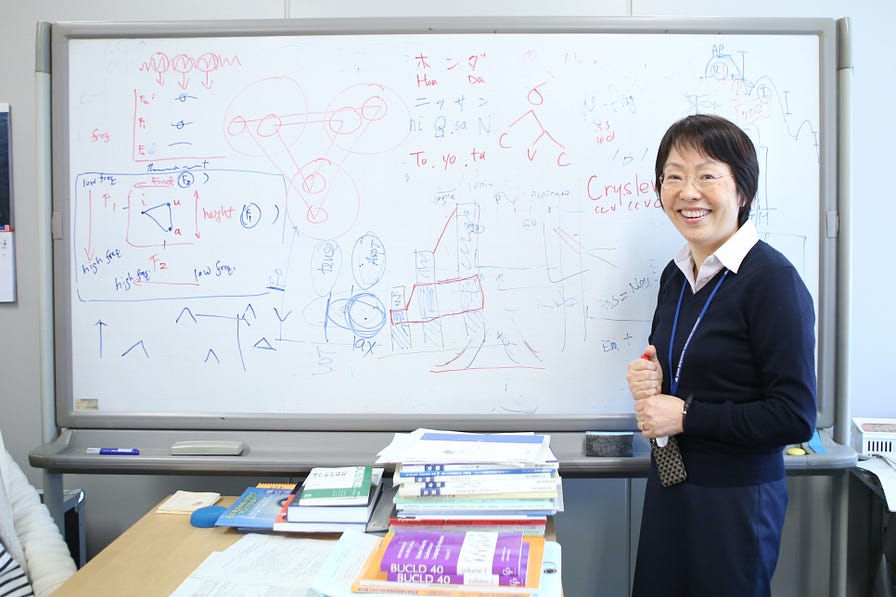

Reiko Mazuka in her lab. Image: Tomoko Nishiyama/RIKEN Brain Science Institute

Another piece of conventional wisdom in linguistics holds that babies undergo a ‘perceptual narrowing’ as they grow, becoming more sensitive to features of their native language while disregarding the subtleties in others. The classic example is the difficulty Japanese adults have distinguishing ‘r’ from ‘l’ sounds, since their native language does not have this distinction (though interestingly, Japanese speakers are as good as English speakers at telling these sounds apart when they are presented in isolation rather than in a linguistic context).

But Mazuka and colleagues have shown that babies can actually get better at making certain speech discriminations as they get older, even without any exposure to these sounds. At 10 months of age, but not at four and a half months, Japanese infants could tell apart non-native sounds like ‘bi:k’ and ‘be:k’, while Japanese adults could discriminate most of the vowels inserted between ‘b’ and ‘k’. The point, says Mazuka, is that these sounds don’t signal the presence of a phoneme in Japanese, but they do in German, a language to which neither the babies nor the adults had been exposed. Models of language-learning like Native Language Magnet or Perceptual Assimilation can’t predict such an improvement in discrimination. “Perceptual narrowing is not the complete story,” says Mazuka. “It’s probably part of larger changes in the brain during the first year of life, such as hemispheric lateralization, that coincide with infants starting to treat certain sounds as speech.”

Phonological illusions are another useful indicator of when infants begin to understand the rules of their native language. ‘Legal’ syllables in Japanese, for example, always have a vowel following a consonant. ‘Illegal’ syllables like ‘abna’ are usually misheard (in this case, as ‘abuna’) by Japanese adults, who mentally repair the syllable by inserting an illusory vowel. Mazuka has found that by 14 months of age, Japanese infants start to experience this same phenomenon, known as vowel epenthesis.
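The ‘abna’ to ‘abuna’ repair can be mimicked with a toy rule on romanized strings. The sketch below is only an illustration, not a model from Mazuka’s lab: it assumes that the illusory vowel is always ‘u’, that a consonant is legal only when followed by a vowel, and that the two familiar exceptions are the moraic nasal ‘n’ and doubled consonants (as in Nissan). The function name `repair` and its `filler` parameter are hypothetical.

```python
VOWELS = set("aeiou")


def repair(word: str, filler: str = "u") -> str:
    """Insert an illusory vowel after any consonant not followed by a vowel.

    Toy assumptions: the filler is always the same vowel, 'n' before a
    consonant or at the end of a word is a legal moraic nasal, and doubled
    consonants (geminates) are left alone.
    """
    out = []
    for i, ch in enumerate(word):
        out.append(ch)
        nxt = word[i + 1] if i + 1 < len(word) else ""
        if ch in VOWELS or nxt in VOWELS:
            continue  # vowel, or consonant already followed by a vowel
        if ch == "n" or ch == nxt:
            continue  # moraic nasal or first half of a geminate
        out.append(filler)
    return "".join(out)


print(repair("abna"))    # the 'illegal' example from the text
print(repair("honda"))   # already legal, left unchanged
```

With these assumptions `repair("abna")` yields `abuna`, the form Japanese adults report hearing, while words that already obey the pattern pass through untouched.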

Results like these from Mazuka and others in the field are converging on the idea that, when it comes to language, babies are voracious statistical learning machines. Once they’re exposed to enough speech sounds, they start to understand when pitch and duration are phonemically significant or how subtle changes in articulation alter the meaning of a word. Mazuka and colleagues have even collected a corpus of Japanese mothers’ ‘baby talk’, recordings of infant-directed speech that have revealed, strangely enough, that moms tend to mumble rather than hyperarticulate to their babies. Another study of corpora from nine languages suggests that the brain may indeed use a statistical approach to telling words apart in the flow of speech, learning “to use the segmentation algorithm that is best suited to one’s native language, [leading] to difficulties segmenting languages belonging to another rhythmic category.” Japanese, standing on its own in the rhythmic category based on morae, may thus present extra challenges for language learners; native Japanese speakers, conversely, may struggle to segment syllable- and stress-timed languages.
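One textbook way to picture statistical word segmentation is with transitional probabilities: how often one syllable is followed by another. The sketch below is an illustration of that general idea only; it is not claimed to be the algorithm used in the nine-language study, and the made-up words (bida, kupa, golu), the function names and the threshold value are all assumptions chosen for the example.

```python
from collections import Counter


def transitional_probs(syllables):
    """P(next syllable | current syllable), estimated from the stream itself."""
    pair_counts = Counter(zip(syllables, syllables[1:]))
    first_counts = Counter(syllables[:-1])
    return {pair: n / first_counts[pair[0]] for pair, n in pair_counts.items()}


def segment(syllables, threshold=0.9):
    """Place a word boundary wherever the transitional probability is low."""
    if not syllables:
        return []
    tp = transitional_probs(syllables)
    words, current = [], [syllables[0]]
    for a, b in zip(syllables, syllables[1:]):
        if tp[(a, b)] < threshold:
            words.append("".join(current))
            current = []
        current.append(b)
    words.append("".join(current))
    return words


# A continuous stream built from three invented two-syllable words.
stream = "bi da ku pa go lu ku pa bi da go lu bi da ku pa".split()
print(segment(stream))
```

In this stream, syllable pairs inside a word always occur together (probability 1.0), while pairs that straddle a word boundary are less predictable, so the low-probability dips recover the invented words without any pauses in the input.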

Mazuka is convinced that, with more data on tonal languages in Asia and Africa and on other types of languages like Japanese, established notions of language acquisition may get a rewrite. “More than half of the world’s population speaks tone languages, yet only scant attention has been paid to tone languages in classifying the world’s languages into three rhythm types [syllable, stress, mora].” In fact, a different way of classifying linguistic rhythm may emerge when the full range of tone languages is analyzed, similar to how the notion of musical rhythm in Western music changed with the introduction of rhythms from African music. “As the only major language using morae, Japanese is a lonely outlier, but now that I’m near the vast Asian continent, I realize that none of these languages are in the system! The study of language development has skewed for a long time towards English, but now it’s time to fix the skew.” With a brand-new grant, Mazuka is helping to set up baby labs in Thailand, South Korea and Hong Kong. “I want to crack a few more Asian languages. Japanese isn’t alone in having all these interesting features. Fundamentally, we want to know how much language is driven by statistical learning from input versus innate biological mechanisms. We can’t test this if we lack data from major languages in the world.”